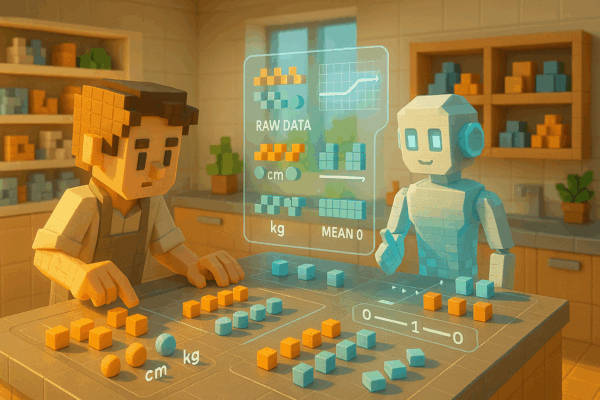

Episode 18: What Is “Normalization” in AI? The Unsung but Essential Step That Prepares Data for Accurate Learning

Normalization is a crucial preprocessing step in AI and machine learning: it rescales data recorded in different units and ranges onto a consistent scale. This foundational step enables fair and accurate analysis by keeping features with large numeric ranges from dominating those with small ones.
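To make the idea concrete, here is a minimal sketch in Python of two common ways to normalize a column of numbers: min-max scaling (which maps values into the range 0 to 1) and z-score standardization (which gives values a mean of 0 and a standard deviation of 1). The function names `min_max_scale` and `z_score`, as well as the sample height and income data, are hypothetical and chosen only for illustration.

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Linearly rescale values so the smallest becomes 0 and the largest 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical, so there is no spread to rescale.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Shift and scale values to mean 0 and (population) std deviation 1."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # All values are identical, so every value sits at the mean.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical features measured in very different units and ranges.
heights_cm = [150.0, 160.0, 170.0, 180.0, 190.0]
incomes_usd = [20000.0, 40000.0, 60000.0, 80000.0, 100000.0]

print(min_max_scale(heights_cm))   # [0.0, 0.25, 0.5, 0.75, 1.0]
print(min_max_scale(incomes_usd))  # [0.0, 0.25, 0.5, 0.75, 1.0]
print(z_score(heights_cm))
```

After min-max scaling, both columns occupy the same 0 to 1 range even though the raw incomes were hundreds of times larger than the raw heights, so neither feature outweighs the other simply because of its units.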