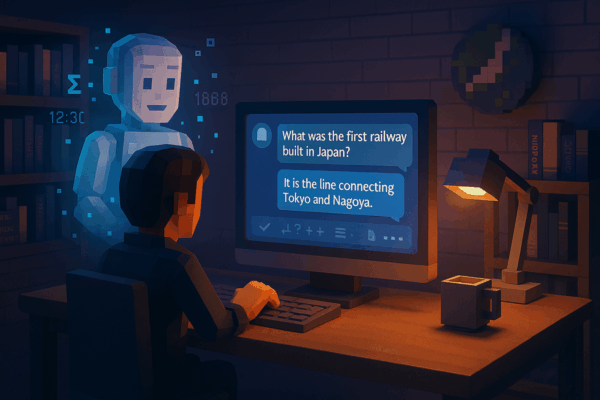

Episode 50: Why Does AI Make Confident Mistakes? Understanding the Truth Behind “Hallucination”

"Hallucination," the phenomenon in which AI confidently generates incorrect information, exposes a core limitation of language-based AI systems. Because these models learn statistical patterns of words rather than verified knowledge, they can produce content that sounds plausible but is factually wrong. The problem is especially serious in fields like medicine and law, where accuracy is essential.
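To make the "patterns of words" idea concrete, here is a minimal sketch of a toy next-word predictor. It is not how production language models work (those use neural networks trained on far more text); the corpus, the function names `train_bigrams` and `predict_next`, and the prompt are all hypothetical and chosen only for illustration. The model counts which word tends to follow which, then answers a question about a country it has never seen.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus (an assumption for illustration only).
# Note that "australia" never appears in it.
CORPUS = [
    "the capital of france is paris",
    "paris is the capital of france",
    "the capital of france is paris",
    "the capital of japan is tokyo",
]


def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, prompt):
    """Return the most frequent follower of the prompt's last word,
    plus its relative frequency (a stand-in for 'confidence')."""
    last = prompt.split()[-1]
    followers = counts[last]
    if not followers:
        return None, 0.0
    word, n = followers.most_common(1)[0]
    return word, n / sum(followers.values())


counts = train_bigrams(CORPUS)
prompt = "the capital of australia is"
word, confidence = predict_next(counts, prompt)
print(f"{prompt} {word} (confidence {confidence:.0%})")
```

The toy model completes the prompt with "paris" because that is the word it most often saw after "is", not because it knows anything about Australia. It has no mechanism for checking facts or for saying "I don't know," which is the same gap, at a much smaller scale, that the episode describes.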